MotIF-1K Dataset

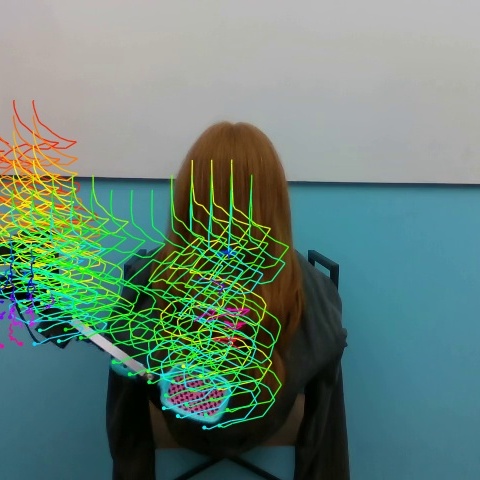

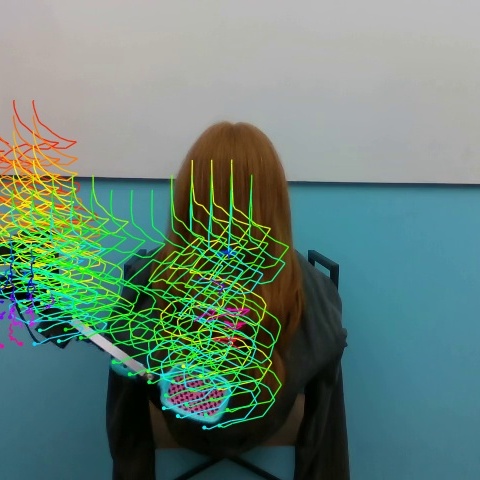

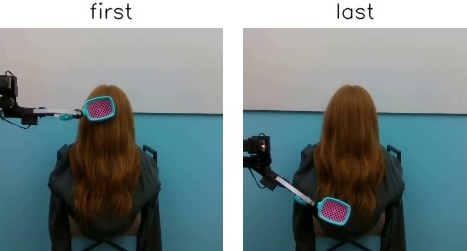

The dataset contains 653 human and 369 robot demonstrations across 13 task categories. For each trajectory, we collect the RGBD image observations, optical flow, single keypoint tracking, and annotate task and motion descriptions. For robot demonstrations, joint states are also in the dataset. In the following examples, task descriptions are written in white and motion descriptions are written in orange.

Robot Demonstrations

➤ Non-Interactive Tasks

"deliver lemonade"

"move in the shortest path"

"draw path"

"move to the right while making vertical oscillations"

"draw path"

"make a triangular motion clockwise"

"draw path"

"make a triangular motion clockwise"

➤ Object-Interactive Tasks

"shake the boba"

"stir"

"pick and place"

➤ Human-Interactive Tasks

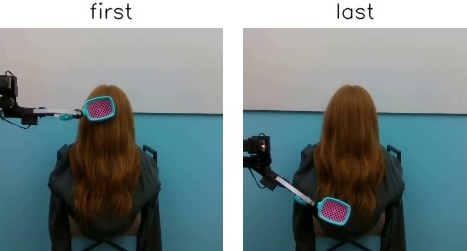

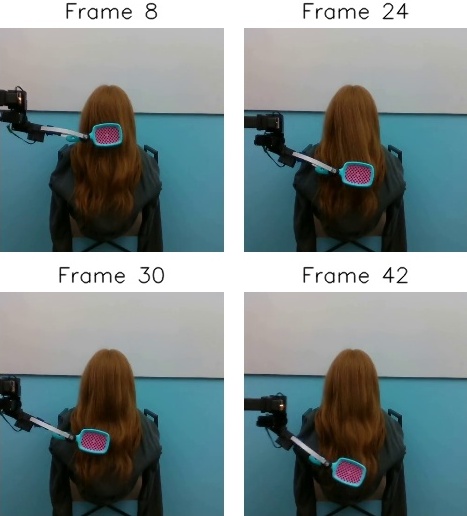

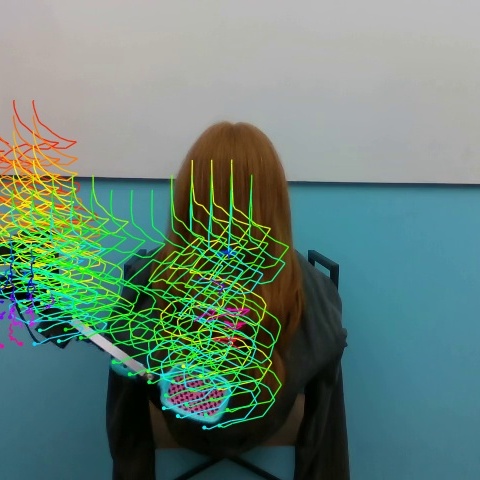

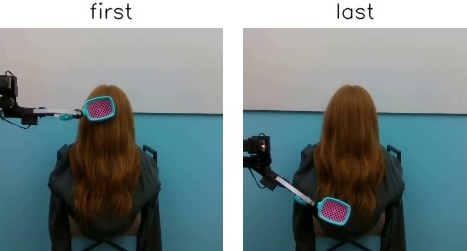

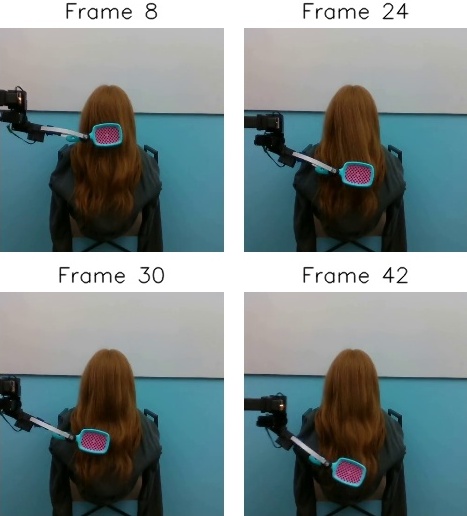

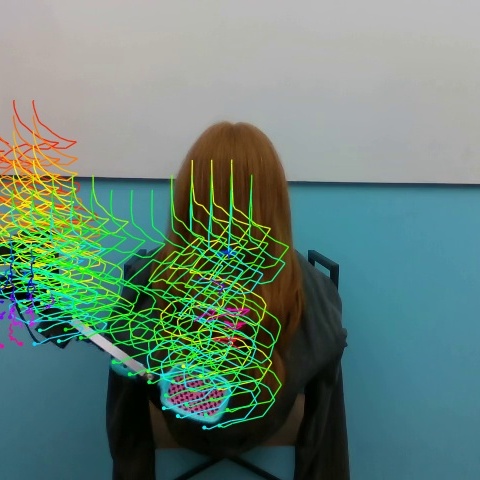

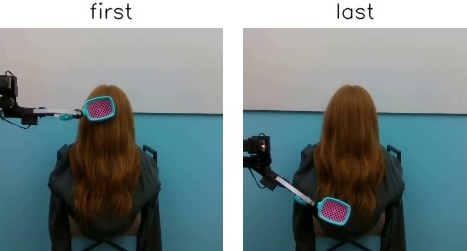

"brush hair"

"curl hair"

"style hair"

"take off hairband"

Human Demonstrations

➤ Object-Interactive Tasks

"pick and place"

"shake the coffee can"

"close the cabinet"

"shake pepper ??"

"shake the boba"

"erase whiteboard"

➤ Human-Interactive Tasks

"handover bottle"

"tidy hair"

"brush hair"

"handover bottle"

Motion Diversity

➤ "shake boba" human demonstrations

"make 4 circular motions clockwise"

"move to the right and to the left, repeating this sequence 3 times"

"move downward, while making horizontal oscillations"

"move upward and downward, repeating this sequence 3 times"

"rotate the object to the left, then reverse to the right, repeating this sequence 2 times"

➤ "deliver lemonade" robot demonstrations

"move in the shortest path"

"make a detour to the right of the manhole"

"make a detour to the left of the grass"

"move in the shortest path"

➤ "brush hair" robot demonstrations

"move downward"

"make 5 vertical strokes moving downward, increasing the starting height of each stroke"

"move downward while making horizontal oscillations"

"make 4 vertical strokes moving downward"

➤ "style hair" human demonstrations

"make 2 circular motions clockwise"

"make a circular motion counter-clockwise"

"move upward and to the right following a convex curve, then move downward"

"move downward and to the right"

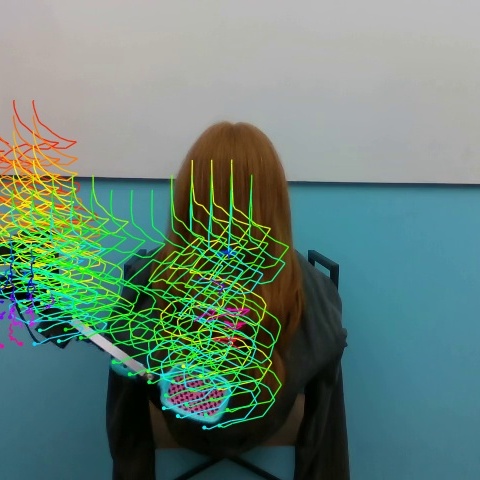

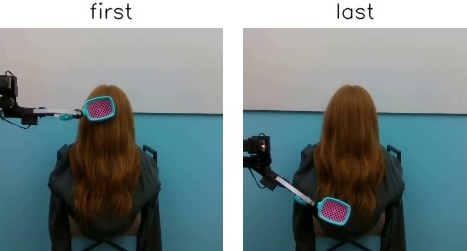

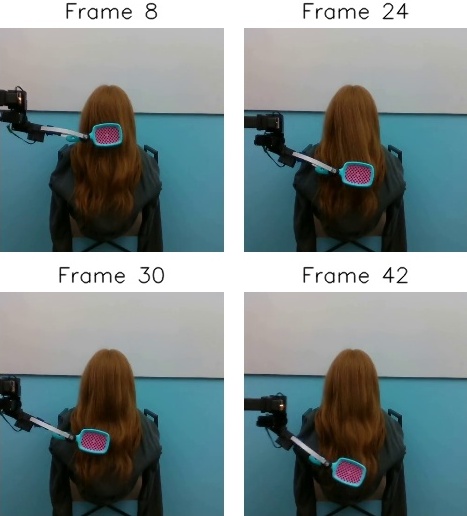

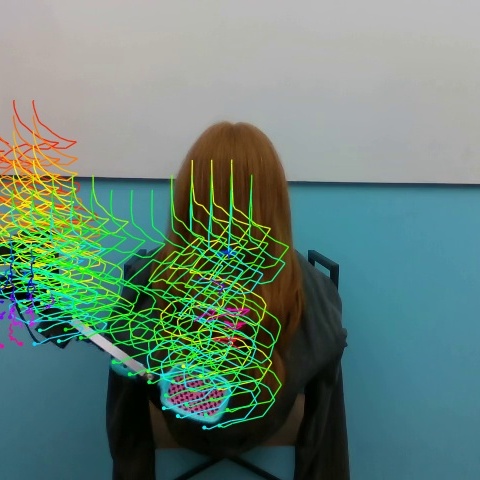

Various Visual Motion Representations

Single Keypoint

Single Keypoint

Optical Flow

Optical Flow

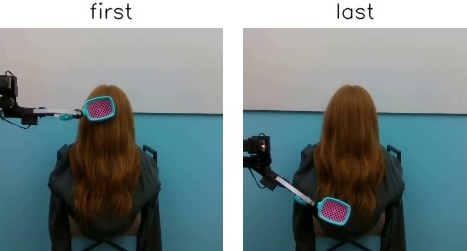

2-frame Storyboard

2-frame Storyboard

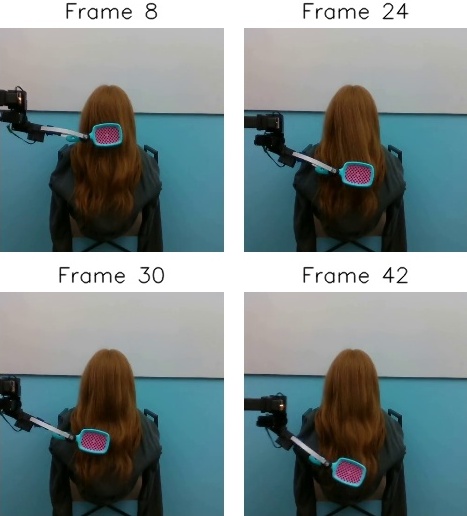

4-frame Storyboard

4-frame Storyboard

Grounded Motion Annotations

Category

Task

Motion Description Examples

Demonstrations

Human

Robot

Non-Interactive

Outdoor Navigation

move in the shortest path

make a detour to the left and follow the walkway, avoiding moving over the grass

-

✔

Indoor Navigation

move in the shortest path

make a detour to the right of the long table, avoiding collision with chairs

-

✔

Draw Path

make a triangular motion clockwise

move upward and to the right

-

✔

Object-Interactive

Shake

move up and down 4 times

completely flip the object to the right and flip it back to its initial state

✔

✔

Pick and place

move downward and to the left

move downward while getting farther from <obstacle>, then move to the left

✔

✔

Stir

make 2 circular motions counter-clockwise

move upward, then move downward while making diagonal oscillations

✔

✔

Wipe

move to the right and move to the left, repeating this sequence 2 times

move to the right, making diagonal oscillations

✔

✔

Open/Close the cabinet

move to the right

move upward and to the left

✔

-

Spread Condiment

move to the left and to the right

move to the left while making back and forth oscillations

-

✔

User-Interactive

Handover

move upward and to the left

move downward and to the right following a concave curve

✔

-

Brush hair

move downward while making horizontal oscillations

make 5 strokes downward, increasing the starting height of each stroke

✔

✔

Tidy hair

move downward and to the right following a convex curve

make a circular motion clockwise, move upward, then move downward and to the right

✔

✔

Style hair

move to the right shortly, then move to the left following a concave curve

make a circular motion clockwise, gradually increasing the radius of the circle

✔

✔

App. Table 1. List of Tasks and Motion Descriptions. The collected dataset contains 653 human and 369 robot demonstrations

across 13 task categories, 36 task instructions, and 239 motion descriptions. Checkmarks denote which agent (human/robot)

demonstrations exist for each task. The table provides two motion description examples for each task.

Results

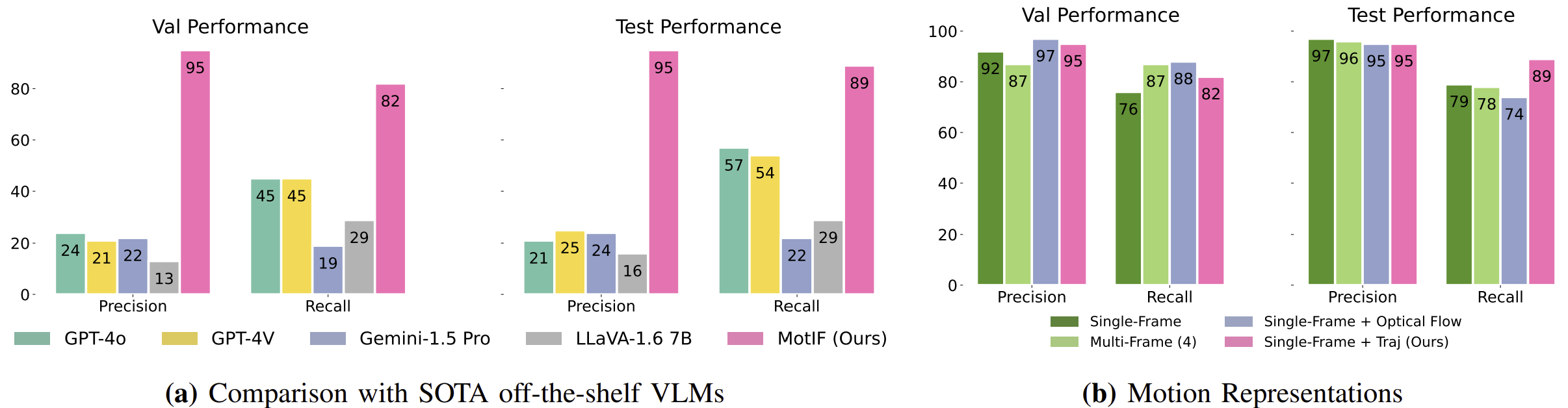

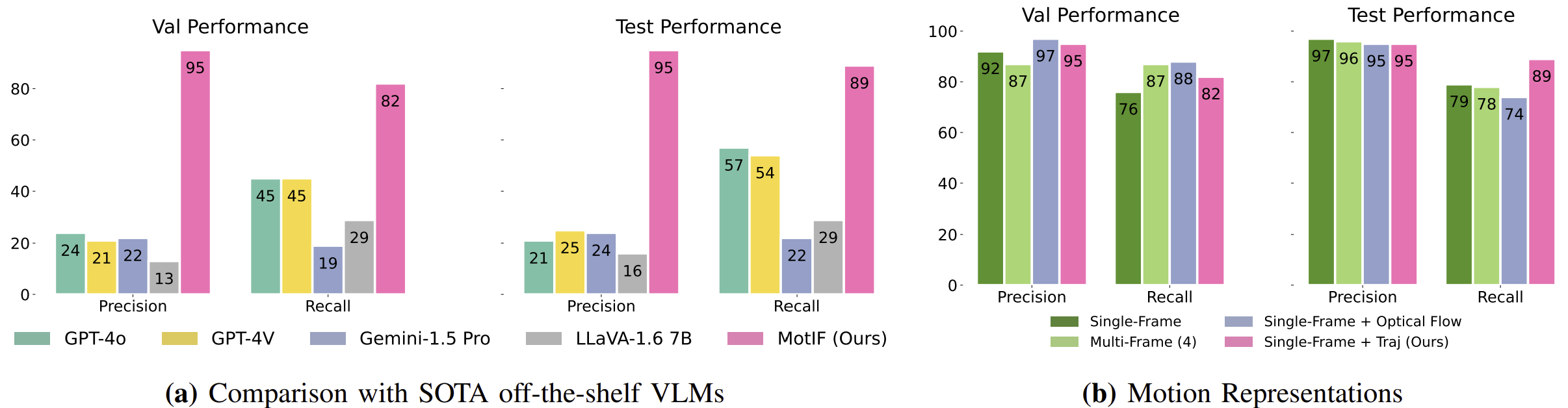

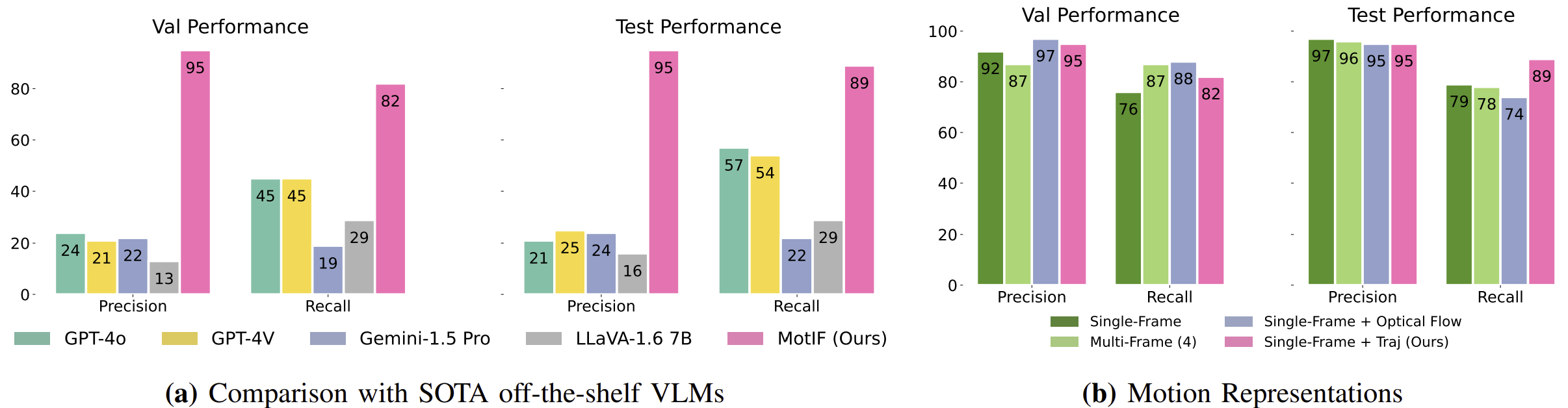

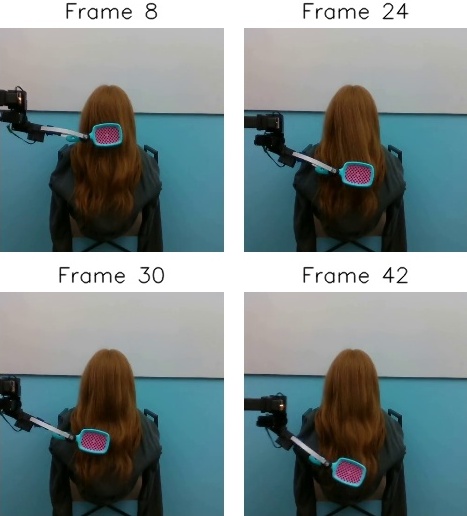

How does MotIF compare to state-of-the-art VLMs?

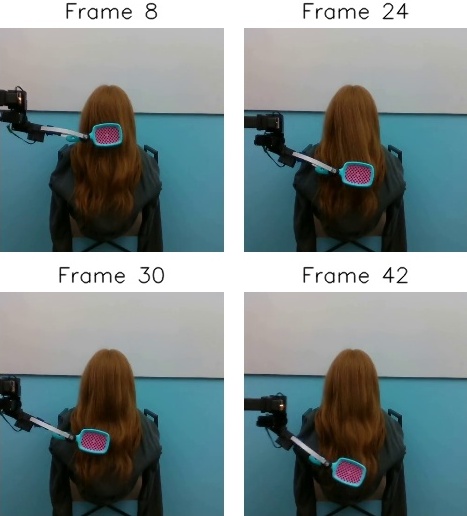

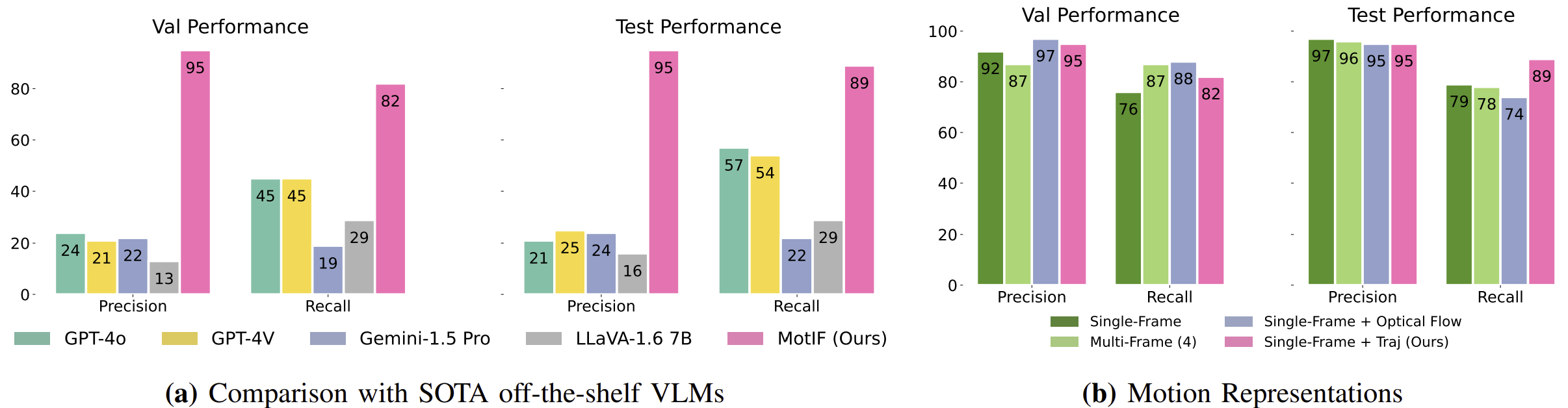

Fig. 7. Performance on MotIF-1K.

(a) shows that our models outperforms state-of-the-art (SOTA) off-the-shelf models in validation and test splits.

(b) We explore motion representations in terms of single-frame vs. multi-frame and the effectiveness of trajectory drawing. Single-frame with trajectory drawing demonstrates the highest recall in the test split, while other motion representations falter. Our approach identifies valid motions effectively and generalizes better than baselines.

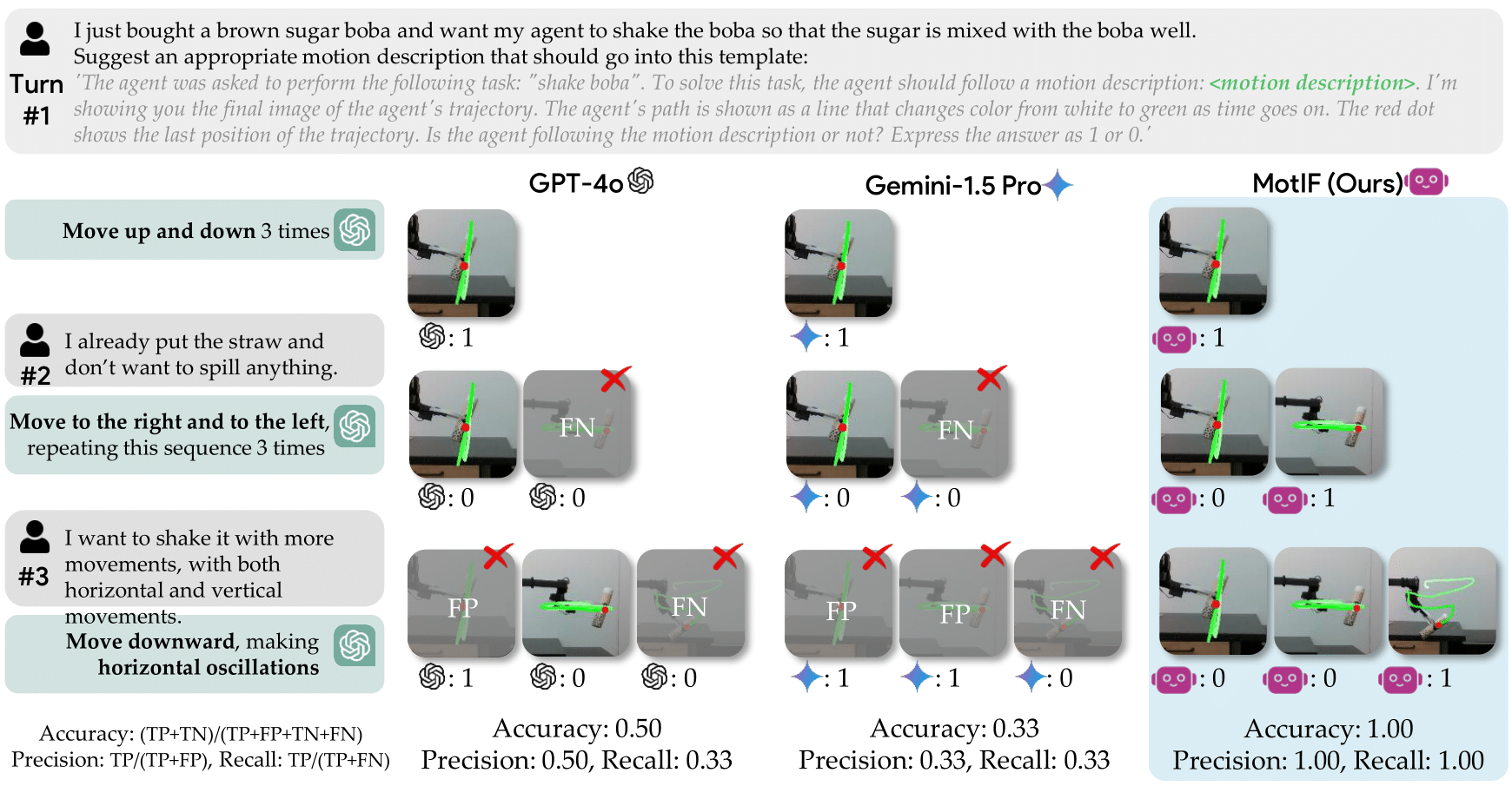

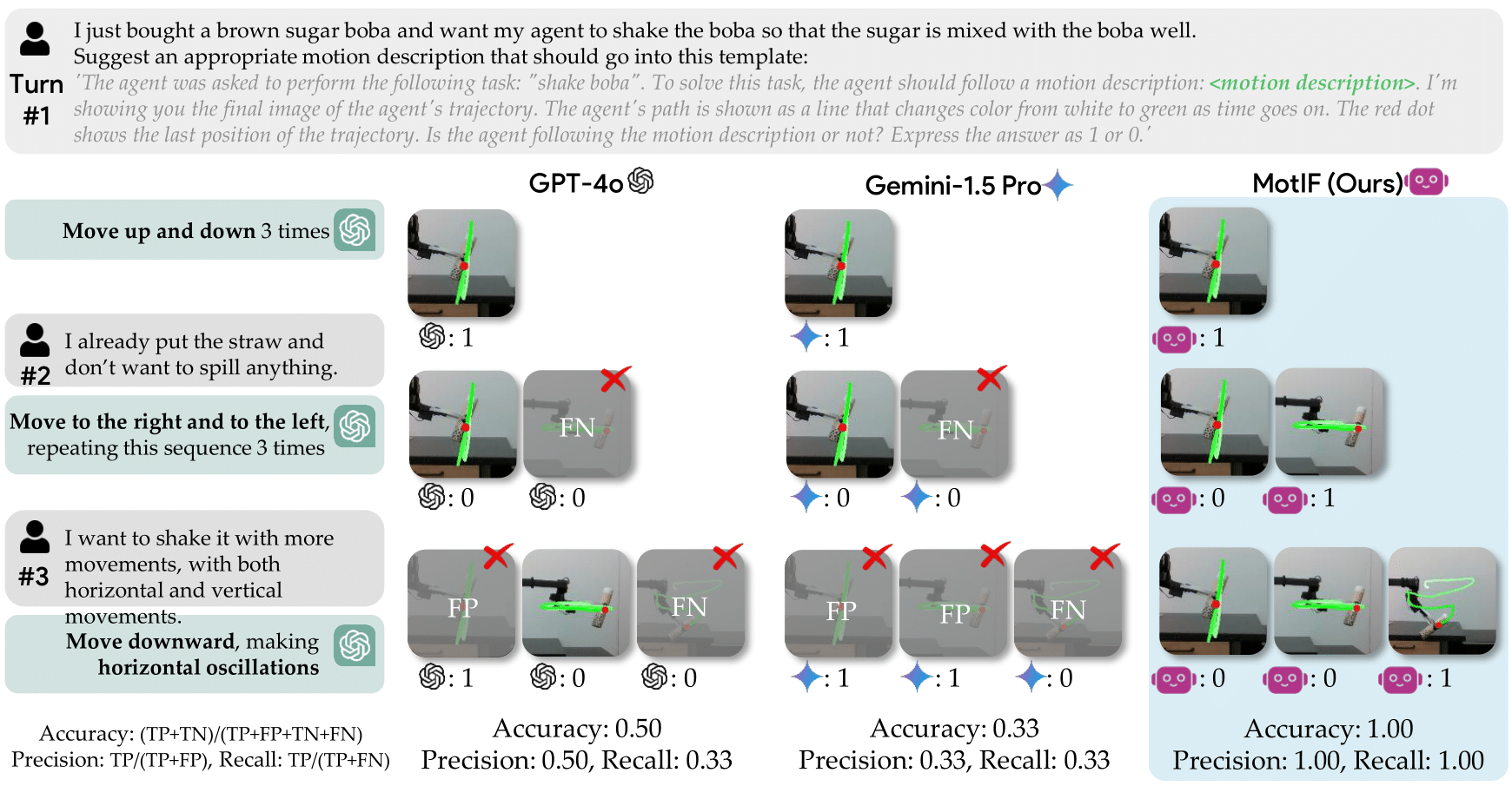

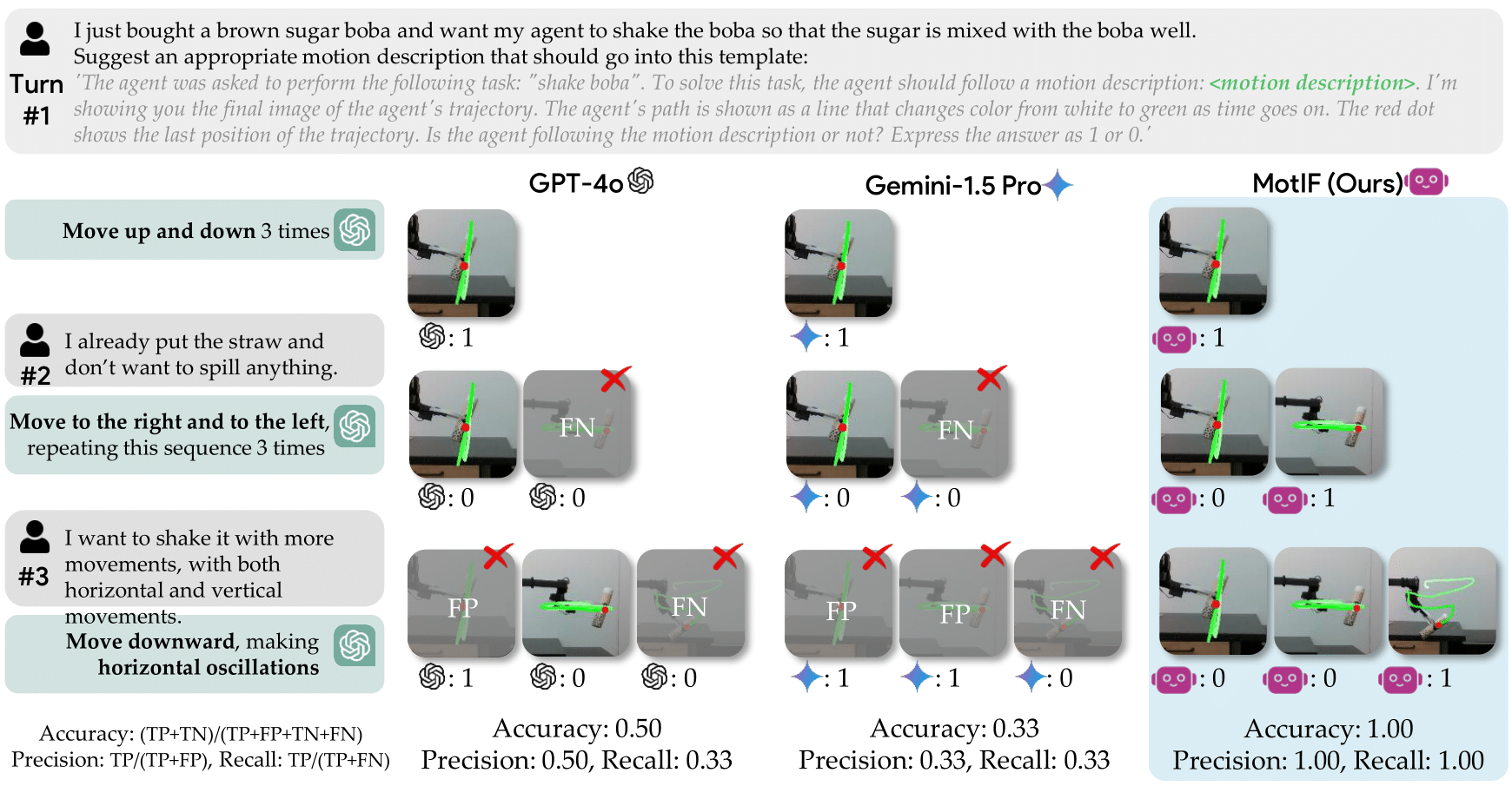

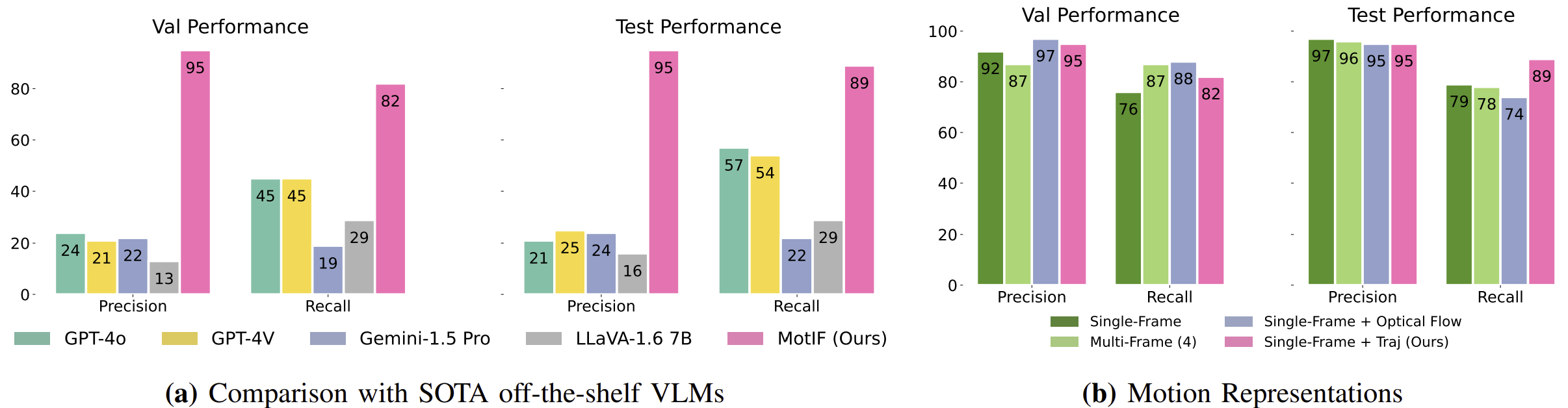

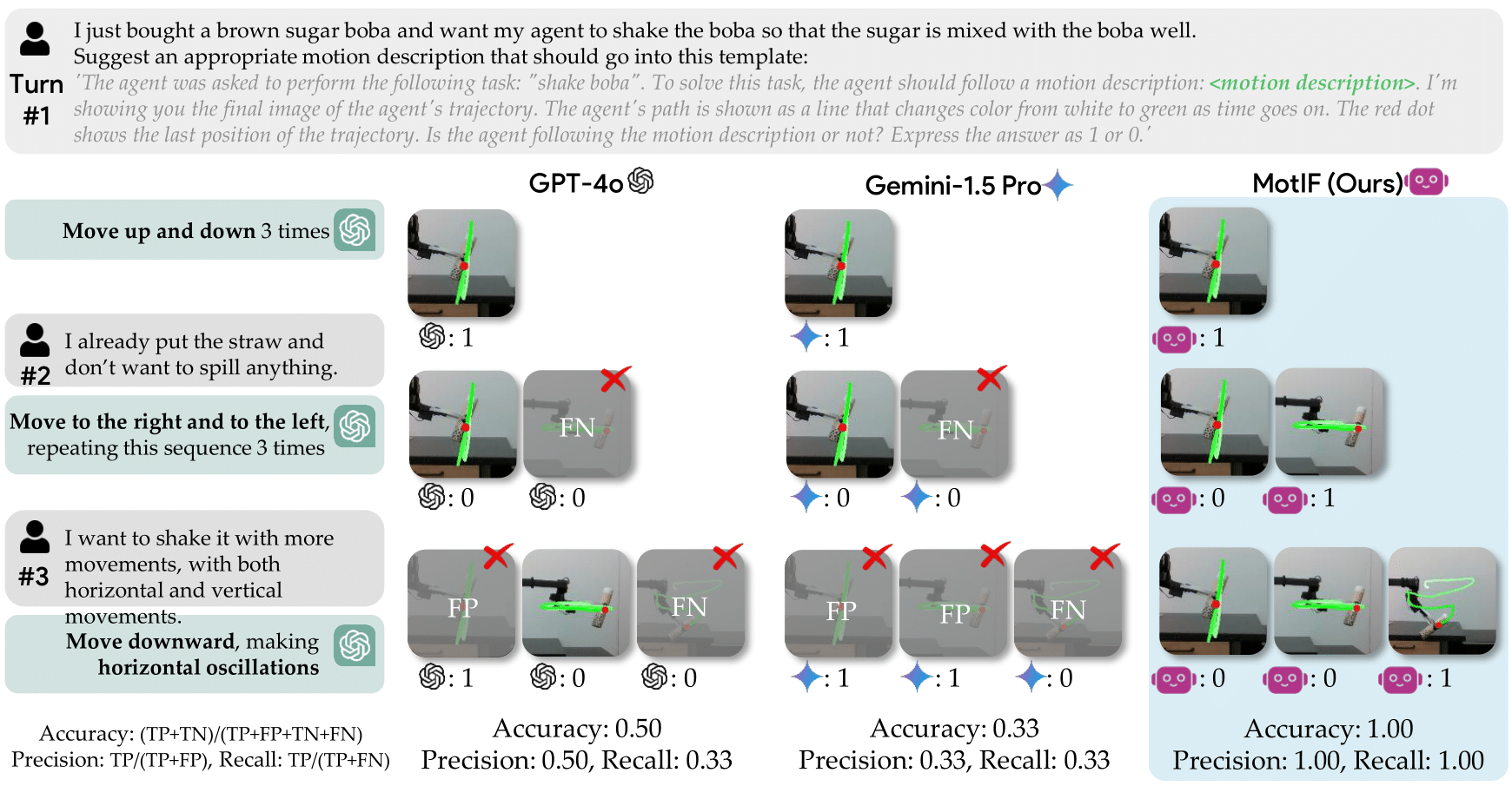

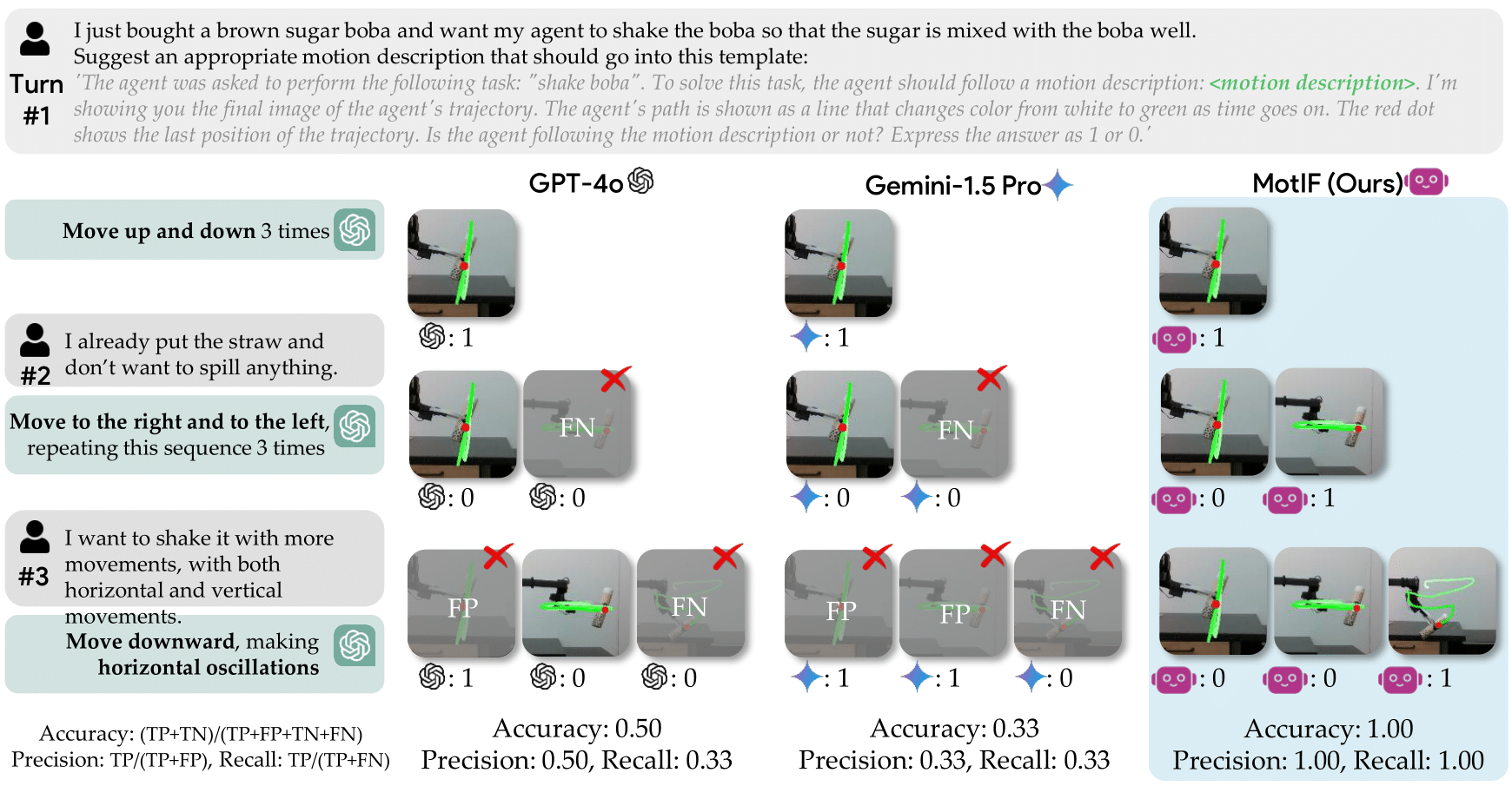

App. Fig. 10. Comparison between MotIF and state-of-the-art closed VLMs.

We compare three VLMs: our model, GPT-4o, and Gemini-1.5 Pro, along a conversation between a user and an LLM. The user specifies the task and the LLM generates an appropriate motion description. The performance of each VLM is measured by predicting if the robot motions align (VLM response: 1) with motion descriptions suggested from the LLM or not (VLM response: 0), where the images are not included in training our model. Comparing the accuracy, precision, and recall for each model, MotIF shows the highest performance in all metrics.

App. Fig. 10 shows a toy scenario comparing GPT-4o, Gemini-1.5 Pro, and MotIF, along a conversation between a user and an LLM (ChatGPT).

After three turns of conversation, we calculate the performance of motion discrimination using the VLMs. Results show that our model achieves the highest accuracy, precision, and recall, successfully understanding robotic motions in all cases.

Visualization of MotIF Outputs

"move in the shortest path"

"move to the right while making vertical oscillations"

"make a triangular motion clockwise"

"make a triangular motion clockwise"

➤ Human-Interactive Tasks

"brush hair"

"curl hair"

"style hair"

"take off hairband"

Human Demonstrations

➤ Object-Interactive Tasks

"pick and place"

"shake the coffee can"

"close the cabinet"

"shake pepper ??"

"shake the boba"

"erase whiteboard"

➤ Human-Interactive Tasks

"handover bottle"

"tidy hair"

"brush hair"

"handover bottle"

Motion Diversity

➤ "shake boba" human demonstrations

"make 4 circular motions clockwise"

"move to the right and to the left, repeating this sequence 3 times"

"move downward, while making horizontal oscillations"

"move upward and downward, repeating this sequence 3 times"

"rotate the object to the left, then reverse to the right, repeating this sequence 2 times"

➤ "deliver lemonade" robot demonstrations

"move in the shortest path"

"make a detour to the right of the manhole"

"make a detour to the left of the grass"

"move in the shortest path"

➤ "brush hair" robot demonstrations

"move downward"

"make 5 vertical strokes moving downward, increasing the starting height of each stroke"

"move downward while making horizontal oscillations"

"make 4 vertical strokes moving downward"

➤ "style hair" human demonstrations

"make 2 circular motions clockwise"

"make a circular motion counter-clockwise"

"move upward and to the right following a convex curve, then move downward"

"move downward and to the right"

Various Visual Motion Representations

Single Keypoint

Single Keypoint

Optical Flow

Optical Flow

2-frame Storyboard

2-frame Storyboard

4-frame Storyboard

4-frame Storyboard

Grounded Motion Annotations

Category

Task

Motion Description Examples

Demonstrations

Human

Robot

Non-Interactive

Outdoor Navigation

move in the shortest path

make a detour to the left and follow the walkway, avoiding moving over the grass

-

✔

Indoor Navigation

move in the shortest path

make a detour to the right of the long table, avoiding collision with chairs

-

✔

Draw Path

make a triangular motion clockwise

move upward and to the right

-

✔

Object-Interactive

Shake

move up and down 4 times

completely flip the object to the right and flip it back to its initial state

✔

✔

Pick and place

move downward and to the left

move downward while getting farther from <obstacle>, then move to the left

✔

✔

Stir

make 2 circular motions counter-clockwise

move upward, then move downward while making diagonal oscillations

✔

✔

Wipe

move to the right and move to the left, repeating this sequence 2 times

move to the right, making diagonal oscillations

✔

✔

Open/Close the cabinet

move to the right

move upward and to the left

✔

-

Spread Condiment

move to the left and to the right

move to the left while making back and forth oscillations

-

✔

User-Interactive

Handover

move upward and to the left

move downward and to the right following a concave curve

✔

-

Brush hair

move downward while making horizontal oscillations

make 5 strokes downward, increasing the starting height of each stroke

✔

✔

Tidy hair

move downward and to the right following a convex curve

make a circular motion clockwise, move upward, then move downward and to the right

✔

✔

Style hair

move to the right shortly, then move to the left following a concave curve

make a circular motion clockwise, gradually increasing the radius of the circle

✔

✔

App. Table 1. List of Tasks and Motion Descriptions. The collected dataset contains 653 human and 369 robot demonstrations

across 13 task categories, 36 task instructions, and 239 motion descriptions. Checkmarks denote which agent (human/robot)

demonstrations exist for each task. The table provides two motion description examples for each task.

Results

How does MotIF compare to state-of-the-art VLMs?

Fig. 7. Performance on MotIF-1K.

(a) shows that our models outperforms state-of-the-art (SOTA) off-the-shelf models in validation and test splits.

(b) We explore motion representations in terms of single-frame vs. multi-frame and the effectiveness of trajectory drawing. Single-frame with trajectory drawing demonstrates the highest recall in the test split, while other motion representations falter. Our approach identifies valid motions effectively and generalizes better than baselines.

App. Fig. 10. Comparison between MotIF and state-of-the-art closed VLMs.

We compare three VLMs: our model, GPT-4o, and Gemini-1.5 Pro, along a conversation between a user and an LLM. The user specifies the task and the LLM generates an appropriate motion description. The performance of each VLM is measured by predicting if the robot motions align (VLM response: 1) with motion descriptions suggested from the LLM or not (VLM response: 0), where the images are not included in training our model. Comparing the accuracy, precision, and recall for each model, MotIF shows the highest performance in all metrics.

App. Fig. 10 shows a toy scenario comparing GPT-4o, Gemini-1.5 Pro, and MotIF, along a conversation between a user and an LLM (ChatGPT).

After three turns of conversation, we calculate the performance of motion discrimination using the VLMs. Results show that our model achieves the highest accuracy, precision, and recall, successfully understanding robotic motions in all cases.

Visualization of MotIF Outputs

➤ Human-Interactive Tasks

"handover bottle"

"tidy hair"

"brush hair"

"handover bottle"

Motion Diversity

➤ "shake boba" human demonstrations

"make 4 circular motions clockwise"

"move to the right and to the left, repeating this sequence 3 times"

"move downward, while making horizontal oscillations"

"move upward and downward, repeating this sequence 3 times"

"rotate the object to the left, then reverse to the right, repeating this sequence 2 times"

➤ "deliver lemonade" robot demonstrations

"move in the shortest path"

"make a detour to the right of the manhole"

"make a detour to the left of the grass"

"move in the shortest path"

➤ "brush hair" robot demonstrations

"move downward"

"make 5 vertical strokes moving downward, increasing the starting height of each stroke"

"move downward while making horizontal oscillations"

"make 4 vertical strokes moving downward"

➤ "style hair" human demonstrations

"make 2 circular motions clockwise"

"make a circular motion counter-clockwise"

"move upward and to the right following a convex curve, then move downward"

"move downward and to the right"

Various Visual Motion Representations

Single Keypoint

Single Keypoint

Optical Flow

Optical Flow

2-frame Storyboard

2-frame Storyboard

4-frame Storyboard

4-frame Storyboard

Grounded Motion Annotations

Category

Task

Motion Description Examples

Demonstrations

Human

Robot

Non-Interactive

Outdoor Navigation

move in the shortest path

make a detour to the left and follow the walkway, avoiding moving over the grass

-

✔

Indoor Navigation

move in the shortest path

make a detour to the right of the long table, avoiding collision with chairs

-

✔

Draw Path

make a triangular motion clockwise

move upward and to the right

-

✔

Object-Interactive

Shake

move up and down 4 times

completely flip the object to the right and flip it back to its initial state

✔

✔

Pick and place

move downward and to the left

move downward while getting farther from <obstacle>, then move to the left

✔

✔

Stir

make 2 circular motions counter-clockwise

move upward, then move downward while making diagonal oscillations

✔

✔

Wipe

move to the right and move to the left, repeating this sequence 2 times

move to the right, making diagonal oscillations

✔

✔

Open/Close the cabinet

move to the right

move upward and to the left

✔

-

Spread Condiment

move to the left and to the right

move to the left while making back and forth oscillations

-

✔

User-Interactive

Handover

move upward and to the left

move downward and to the right following a concave curve

✔

-

Brush hair

move downward while making horizontal oscillations

make 5 strokes downward, increasing the starting height of each stroke

✔

✔

Tidy hair

move downward and to the right following a convex curve

make a circular motion clockwise, move upward, then move downward and to the right

✔

✔

Style hair

move to the right shortly, then move to the left following a concave curve

make a circular motion clockwise, gradually increasing the radius of the circle

✔

✔

App. Table 1. List of Tasks and Motion Descriptions. The collected dataset contains 653 human and 369 robot demonstrations

across 13 task categories, 36 task instructions, and 239 motion descriptions. Checkmarks denote which agent (human/robot)

demonstrations exist for each task. The table provides two motion description examples for each task.

Results

How does MotIF compare to state-of-the-art VLMs?

Fig. 7. Performance on MotIF-1K.

(a) shows that our models outperforms state-of-the-art (SOTA) off-the-shelf models in validation and test splits.

(b) We explore motion representations in terms of single-frame vs. multi-frame and the effectiveness of trajectory drawing. Single-frame with trajectory drawing demonstrates the highest recall in the test split, while other motion representations falter. Our approach identifies valid motions effectively and generalizes better than baselines.

App. Fig. 10. Comparison between MotIF and state-of-the-art closed VLMs.

We compare three VLMs: our model, GPT-4o, and Gemini-1.5 Pro, along a conversation between a user and an LLM. The user specifies the task and the LLM generates an appropriate motion description. The performance of each VLM is measured by predicting if the robot motions align (VLM response: 1) with motion descriptions suggested from the LLM or not (VLM response: 0), where the images are not included in training our model. Comparing the accuracy, precision, and recall for each model, MotIF shows the highest performance in all metrics.

App. Fig. 10 shows a toy scenario comparing GPT-4o, Gemini-1.5 Pro, and MotIF, along a conversation between a user and an LLM (ChatGPT).

After three turns of conversation, we calculate the performance of motion discrimination using the VLMs. Results show that our model achieves the highest accuracy, precision, and recall, successfully understanding robotic motions in all cases.

Visualization of MotIF Outputs

➤ "deliver lemonade" robot demonstrations

"move in the shortest path"

"make a detour to the right of the manhole"

"make a detour to the left of the grass"

"move in the shortest path"

➤ "brush hair" robot demonstrations

"move downward"

"make 5 vertical strokes moving downward, increasing the starting height of each stroke"

"move downward while making horizontal oscillations"

"make 4 vertical strokes moving downward"

➤ "style hair" human demonstrations

"make 2 circular motions clockwise"

"make a circular motion counter-clockwise"

"move upward and to the right following a convex curve, then move downward"

"move downward and to the right"

Various Visual Motion Representations

Single Keypoint

Single Keypoint

Optical Flow

Optical Flow

2-frame Storyboard

2-frame Storyboard

4-frame Storyboard

4-frame Storyboard

Grounded Motion Annotations

Category

Task

Motion Description Examples

Demonstrations

Human

Robot

Non-Interactive

Outdoor Navigation

move in the shortest path

make a detour to the left and follow the walkway, avoiding moving over the grass

-

✔

Indoor Navigation

move in the shortest path

make a detour to the right of the long table, avoiding collision with chairs

-

✔

Draw Path

make a triangular motion clockwise

move upward and to the right

-

✔

Object-Interactive

Shake

move up and down 4 times

completely flip the object to the right and flip it back to its initial state

✔

✔

Pick and place

move downward and to the left

move downward while getting farther from <obstacle>, then move to the left

✔

✔

Stir

make 2 circular motions counter-clockwise

move upward, then move downward while making diagonal oscillations

✔

✔

Wipe

move to the right and move to the left, repeating this sequence 2 times

move to the right, making diagonal oscillations

✔

✔

Open/Close the cabinet

move to the right

move upward and to the left

✔

-

Spread Condiment

move to the left and to the right

move to the left while making back and forth oscillations

-

✔

User-Interactive

Handover

move upward and to the left

move downward and to the right following a concave curve

✔

-

Brush hair

move downward while making horizontal oscillations

make 5 strokes downward, increasing the starting height of each stroke

✔

✔

Tidy hair

move downward and to the right following a convex curve

make a circular motion clockwise, move upward, then move downward and to the right

✔

✔

Style hair

move to the right shortly, then move to the left following a concave curve

make a circular motion clockwise, gradually increasing the radius of the circle

✔

✔

App. Table 1. List of Tasks and Motion Descriptions. The collected dataset contains 653 human and 369 robot demonstrations

across 13 task categories, 36 task instructions, and 239 motion descriptions. Checkmarks denote which agent (human/robot)

demonstrations exist for each task. The table provides two motion description examples for each task.

Results

How does MotIF compare to state-of-the-art VLMs?

Fig. 7. Performance on MotIF-1K.

(a) shows that our models outperforms state-of-the-art (SOTA) off-the-shelf models in validation and test splits.

(b) We explore motion representations in terms of single-frame vs. multi-frame and the effectiveness of trajectory drawing. Single-frame with trajectory drawing demonstrates the highest recall in the test split, while other motion representations falter. Our approach identifies valid motions effectively and generalizes better than baselines.

App. Fig. 10. Comparison between MotIF and state-of-the-art closed VLMs.

We compare three VLMs: our model, GPT-4o, and Gemini-1.5 Pro, along a conversation between a user and an LLM. The user specifies the task and the LLM generates an appropriate motion description. The performance of each VLM is measured by predicting if the robot motions align (VLM response: 1) with motion descriptions suggested from the LLM or not (VLM response: 0), where the images are not included in training our model. Comparing the accuracy, precision, and recall for each model, MotIF shows the highest performance in all metrics.

App. Fig. 10 shows a toy scenario comparing GPT-4o, Gemini-1.5 Pro, and MotIF, along a conversation between a user and an LLM (ChatGPT).

After three turns of conversation, we calculate the performance of motion discrimination using the VLMs. Results show that our model achieves the highest accuracy, precision, and recall, successfully understanding robotic motions in all cases.

Visualization of MotIF Outputs

➤ "style hair" human demonstrations

"make 2 circular motions clockwise"

"make a circular motion counter-clockwise"

"move upward and to the right following a convex curve, then move downward"

"move downward and to the right"

Various Visual Motion Representations

Single Keypoint

Single Keypoint

Optical Flow

Optical Flow

2-frame Storyboard

2-frame Storyboard

4-frame Storyboard

4-frame Storyboard

Grounded Motion Annotations

Category

Task

Motion Description Examples

Demonstrations

Human

Robot

Non-Interactive

Outdoor Navigation

move in the shortest path

make a detour to the left and follow the walkway, avoiding moving over the grass

-

✔

Indoor Navigation

move in the shortest path

make a detour to the right of the long table, avoiding collision with chairs

-

✔

Draw Path

make a triangular motion clockwise

move upward and to the right

-

✔

Object-Interactive

Shake

move up and down 4 times

completely flip the object to the right and flip it back to its initial state

✔

✔

Pick and place

move downward and to the left

move downward while getting farther from <obstacle>, then move to the left

✔

✔

Stir

make 2 circular motions counter-clockwise

move upward, then move downward while making diagonal oscillations

✔

✔

Wipe

move to the right and move to the left, repeating this sequence 2 times

move to the right, making diagonal oscillations

✔

✔

Open/Close the cabinet

move to the right

move upward and to the left

✔

-

Spread Condiment

move to the left and to the right

move to the left while making back and forth oscillations

-

✔

User-Interactive

Handover

move upward and to the left

move downward and to the right following a concave curve

✔

-

Brush hair

move downward while making horizontal oscillations

make 5 strokes downward, increasing the starting height of each stroke

✔

✔

Tidy hair

move downward and to the right following a convex curve

make a circular motion clockwise, move upward, then move downward and to the right

✔

✔

Style hair

move to the right shortly, then move to the left following a concave curve

make a circular motion clockwise, gradually increasing the radius of the circle

✔

✔

App. Table 1. List of Tasks and Motion Descriptions. The collected dataset contains 653 human and 369 robot demonstrations

across 13 task categories, 36 task instructions, and 239 motion descriptions. Checkmarks denote which agent (human/robot)

demonstrations exist for each task. The table provides two motion description examples for each task.

Results

How does MotIF compare to state-of-the-art VLMs?

Fig. 7. Performance on MotIF-1K.

(a) shows that our models outperforms state-of-the-art (SOTA) off-the-shelf models in validation and test splits.

(b) We explore motion representations in terms of single-frame vs. multi-frame and the effectiveness of trajectory drawing. Single-frame with trajectory drawing demonstrates the highest recall in the test split, while other motion representations falter. Our approach identifies valid motions effectively and generalizes better than baselines.

App. Fig. 10. Comparison between MotIF and state-of-the-art closed VLMs.

We compare three VLMs: our model, GPT-4o, and Gemini-1.5 Pro, along a conversation between a user and an LLM. The user specifies the task and the LLM generates an appropriate motion description. The performance of each VLM is measured by predicting if the robot motions align (VLM response: 1) with motion descriptions suggested from the LLM or not (VLM response: 0), where the images are not included in training our model. Comparing the accuracy, precision, and recall for each model, MotIF shows the highest performance in all metrics.

App. Fig. 10 shows a toy scenario comparing GPT-4o, Gemini-1.5 Pro, and MotIF, along a conversation between a user and an LLM (ChatGPT).

After three turns of conversation, we calculate the performance of motion discrimination using the VLMs. Results show that our model achieves the highest accuracy, precision, and recall, successfully understanding robotic motions in all cases.

Visualization of MotIF Outputs

Grounded Motion Annotations

| Category | Task | Motion Description Examples | Demonstrations | |

|---|---|---|---|---|

| Human | Robot | |||

| Non-Interactive | Outdoor Navigation | move in the shortest path make a detour to the left and follow the walkway, avoiding moving over the grass |

- | ✔ |

| Indoor Navigation | move in the shortest path make a detour to the right of the long table, avoiding collision with chairs |

- | ✔ | |

| Draw Path | make a triangular motion clockwise move upward and to the right |

- | ✔ | |

| Object-Interactive | Shake | move up and down 4 times completely flip the object to the right and flip it back to its initial state |

✔ | ✔ |

| Pick and place | move downward and to the left move downward while getting farther from <obstacle>, then move to the left |

✔ | ✔ | |

| Stir | make 2 circular motions counter-clockwise move upward, then move downward while making diagonal oscillations |

✔ | ✔ | |

| Wipe | move to the right and move to the left, repeating this sequence 2 times move to the right, making diagonal oscillations |

✔ | ✔ | |

| Open/Close the cabinet | move to the right move upward and to the left |

✔ | - | |

| Spread Condiment | move to the left and to the right move to the left while making back and forth oscillations |

- | ✔ | |

| User-Interactive | Handover | move upward and to the left move downward and to the right following a concave curve |

✔ | - |

| Brush hair | move downward while making horizontal oscillations make 5 strokes downward, increasing the starting height of each stroke |

✔ | ✔ | |

| Tidy hair | move downward and to the right following a convex curve make a circular motion clockwise, move upward, then move downward and to the right |

✔ | ✔ | |

| Style hair | move to the right shortly, then move to the left following a concave curve make a circular motion clockwise, gradually increasing the radius of the circle |

✔ | ✔ | |

App. Table 1. List of Tasks and Motion Descriptions. The collected dataset contains 653 human and 369 robot demonstrations across 13 task categories, 36 task instructions, and 239 motion descriptions. Checkmarks denote which agent (human/robot) demonstrations exist for each task. The table provides two motion description examples for each task.

Results

How does MotIF compare to state-of-the-art VLMs?

Fig. 7. Performance on MotIF-1K. (a) shows that our models outperforms state-of-the-art (SOTA) off-the-shelf models in validation and test splits. (b) We explore motion representations in terms of single-frame vs. multi-frame and the effectiveness of trajectory drawing. Single-frame with trajectory drawing demonstrates the highest recall in the test split, while other motion representations falter. Our approach identifies valid motions effectively and generalizes better than baselines.

App. Fig. 10. Comparison between MotIF and state-of-the-art closed VLMs. We compare three VLMs: our model, GPT-4o, and Gemini-1.5 Pro, along a conversation between a user and an LLM. The user specifies the task and the LLM generates an appropriate motion description. The performance of each VLM is measured by predicting if the robot motions align (VLM response: 1) with motion descriptions suggested from the LLM or not (VLM response: 0), where the images are not included in training our model. Comparing the accuracy, precision, and recall for each model, MotIF shows the highest performance in all metrics.

App. Fig. 10 shows a toy scenario comparing GPT-4o, Gemini-1.5 Pro, and MotIF, along a conversation between a user and an LLM (ChatGPT). After three turns of conversation, we calculate the performance of motion discrimination using the VLMs. Results show that our model achieves the highest accuracy, precision, and recall, successfully understanding robotic motions in all cases.

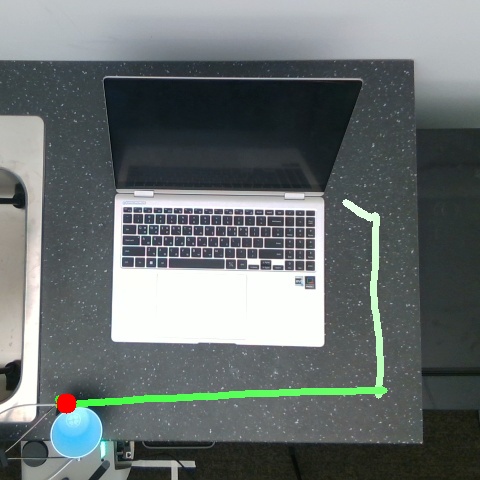

➤ While motion description "move downward, then move to the left" is included in the training data, our VLM generalizes to understanding unseen motion descriptions (row 2-4 in table).

| Motion Description | MotIF Output | Prediction Correctness |

|---|---|---|

| move downward, then move to the left | 1 | ✔ |

| move farther from the laptop, move downward, then move to the left | 1 | ✔ |

| move downward and to the left, passing over the laptop | 0 | ✔ |

| move over the laptop | 0 | ✔ |

➤ The following trajectory visualizes a motion in an unseen camera viewpoint. Although the training data only contain front view images for this task, our model effectively understands the robot's motion in an unseen camera viewpoint (topdown view).

| Motion Description | MotIF Output | Prediction Correctness |

|---|---|---|

| move downward, while making horizontal oscillations | 1 | ✔ |

| move downward, while making side-to-side movements | 1 | ✔ |

| move downward | 0 | ✔ |

| move downward, while making vertical oscillations | 0 | ✔ |

➤ The following trajectory visualizes a motion with an unseen object. While the training data only contains motions of spreading condiments on pizza with parmesan cheese, our model effectively understands the robot's motion with an unseen object, parsley, accurately determining ground truth and aligning paraphrased motion descriptions with the robot's motion.

| Motion Description | MotIF Output | Prediction Correctness |

|---|---|---|

| move to the left, while making vertical oscillations and alternating rotations | 1 | ✔ |

| move to the left, while making vertical oscillations | 1 | ✔ |

| move to the left, while making vertical shaking movements | 1 | ✔ |

| move to the left in a straight line | 0 | ✔ |

➤ The following trajectory visualizes an unseen grounded motion. Our model effectively understands the semantic grounding of the robot's motion in a navigation task, such as moving over or making a detour to avoid a specific instance (e.g., manhole) in the environment.

| Motion Description | MotIF Output | Prediction Correctness |

|---|---|---|

| make a detour to the right of the manhole | 1 | ✔ |

| move forward, making a detour to the right of the manhole | 1 | ✔ |

| move forward in the shortest path | 0 | ✔ |

| move forward in a straight line, moving over the manhole | 0 | ✔ |

Qualitative Analysis on Off-the-shelf VLMs

Example Trajectory of a robot brushing hair. The robot is brushing the hair by moving downward, while making horizontal oscillations.

To assess the motion understanding capabilities of off-the-shelf VLMs, we conducted an experiment where GPT-4V and GPT-4o were asked to describe a robot's motion from a video of it brushing hair. Although GPT-4o generated a detailed response by extracting keyframes and detecting the trajectory, it ultimately failed to describe the specific shape of the motion or how it was grounded in the scene.

The model is given a video of the robot's motion with the question prompt. The video does not include any trajectory visualization. Given the video, the model fails to describe the semantic meaning of the robot's motion.

Describe the robot's motion.

# GPT-4V Answer

It seems you've uploaded a video file related to the robot's motion. Let's analyze the content of this video to describe the motion in detail. I'll review the video and provide a description of the robot's movements. Please hold on for a moment.

The video is ready for viewing. Please watch the robot's motion in the video above. After viewing, I can help with any specific analysis or description you need about the robot's movements. If you have specific aspects of the motion you're interested in, such as speed, trajectory, or interaction with objects, let me know!

# GPT-4o Answer

The first frame of the video shows the side view of a robot performing a task. To describe the robot's motion, I'll need to analyze the frames in the video to identify the movement pattern. I'll extract and analyze key frames to provide a detailed description of the motion.

Let's proceed by extracting key frames and tracking the movement of the robot's end effector.

The extracted key frames from the video show the robot's motion over time. To provide a detailed description of the robot's motion, let's analyze these frames:

The robot's end effector appears to be moving in a specific path throughout the video.

The trajectory includes several distinct positions and orientations at different times.

To describe the motion more precisely, I will track the movement of the robot's end effector across these frames. This will help in visualizing and understanding the pattern of movement.

The tracked positions of the robot's end effector across the frames indicate its motion pattern. Here is the detailed description of the robot's motion:

Initial Position: The robot starts with the end effector near the top left quadrant of the frame.

Motion Path: The end effector follows a somewhat curved path, moving diagonally towards the bottom right quadrant.

Key Positions:

Frame 1: (146, 166)

Frame 2: (174, 173)

Frame 3: (303, 221)

Frame 4: (260, 236)

Frame 5: (296, 245)

Frame 6: (265, 225)

Frame 7: (300, 266)

Frame 8: (245, 282)

Frame 9: (159, 258)

Frame 10: (289, 319)

Frame 11: (262, 353)

Frame 12: (318, 336)

Overall Trajectory: The robot's end effector moves in a non-linear path with variations in both the x and y directions, suggesting a complex motion.

The movement is likely part of a task requiring precise positioning and orientation changes. If you have any specific aspects of the motion or additional details you'd like to focus on, please let me know!

The model is given a video of the robot's motion with the robot's trajectory overlaid, and the question prompt.

I've loaded the video of the robot's motion for the task of brushing hair. To provide a detailed description of the motion, I will analyze the video and describe the path and color change over time, along with the final position indicated by the red dot.

Let's start by extracting key frames and analyzing the motion trajectory.

<12x1 storyboard displayed>

The key frames extracted from the video provide a visual representation of the robot's motion for the task of brushing hair. Here's a detailed description of the motion:

Initial Position: The robot starts with the brush positioned near the top of the hair, as indicated by the white color at the beginning of the trajectory.

Motion Path: The robot moves the brush in a downward stroke, following a smooth and continuous path. The color of the trajectory gradually changes from white to green, indicating the progression of time.

Path Continuation: The robot completes the first downward stroke and moves the brush back up to the starting position, preparing for another stroke. The color of the line continues to change from white to green, showing the ongoing motion.

Red Dot: The red dot at the end of the trajectory indicates the last position of the brush at the end of the video. This dot signifies the completion of the brushing motion.

Overall, the robot's motion is characterized by a repetitive up-and-down brushing movement, with the color-changing line visualizing the time progression of the motion. The red dot marks the final position of the brush.

BibTeX

@article{hwang2025motif,

title={MotIF: Motion Instruction Fine-tuning},

author={Hwang, Minyoung and Hejna, Joey and Sadigh, Dorsa and Bisk, Yonatan},

journal={IEEE Robotics and Automation Letters},

year={2025},

publisher={IEEE}

}